Using SQLCMD to Automate Mirroring Databases to the Cloud

Interesting project I’ve been involved in this week – scripting the process of Mirroring On-Premise databases up into the cloud (on Windows Azure – not SQL Azure) for DR purposes.

Once the Windows Azure VM was built, we installed SQL Server 2012 SP1 to match our production systems and constructed the required mirroring endpoints. After that (given that I had quite a few databases to mirror) I set about generating a script to automate the process (I am lazy after all!). This is the result of it all – a fully functional mirroring script :-

— Setup Local Variables for the Database Mirroring

— Make sure the MirrorServer is the same SQL Server Version

— *OR HIGHER* than the PrincipalServer – otherwise the

— Database Restore will fail.

—

— Also make sure that the restore logical filenames and

— restore physical filenames are set appropriately from the

— RESTORE FILELISTONLY command….

:setvar SQLCMDLOGINTIMEOUT 60

:SETVAR PrincipalServer

:SETVAR PrincipalHost

:SETVAR PrincipalServerListenerPort

:SETVAR PrincipalBackupPath

:SETVAR MirrorServer

:SETVAR MirrorHost

:SETVAR MirrorServerListenerPort

:SETVAR MirrorRestorePath

:SETVAR MirrorDatabaseMDFPath “path to mirror ldf file”

:SETVAR MirrorDatabaseLDFPath “path to mirror mdf file”

:SETVAR Database2Mirror

GO

:ON ERROR EXIT

GO

SET NOCOUNT ON

— Connect to the Principal Server and Check the Database we

— intend to mirror is in the FULL recovery mode

:CONNECT $(PrincipalServer) -l $(SQLCMDLOGINTIMEOUT)

— Mirroring ONLY Supports the FULL recovery Model

PRINT ‘Checking RECOVERY Mode on Database $(Database2Mirror)’

GO

IF DATABASEPROPERTYEX(‘$(Database2Mirror)’, ‘Recovery’) != N’FULL’

ALTER DATABASE $(Database2Mirror) SET RECOVERY FULL

GO

— Now Backup the Database from the Principal and restore

— it onto the Mirror Server

PRINT ‘Backing Up Database $(Database2Mirror) On $(PrincipalServer) to $(PrincipalBackupPath)’

GO

— COPY_ONLY so as to not upset the backup LSN chain

BACKUP DATABASE $(Database2Mirror)

TO DISK = N’$(PrincipalBackupPath)\$(Database2Mirror).bak’

WITH COPY_ONLY, INIT, FORMAT

GO

PRINT ‘Backing Up Database $(Database2Mirror) Log On $(PrincipalServer) to $(PrincipalBackupPath)’

GO

— COPY_ONLY so as to not upset the backup LSN chain

BACKUP LOG $(Database2Mirror)

TO DISK = N’$(PrincipalBackupPath)\$(Database2Mirror).trn’

WITH COPY_ONLY, INIT, FORMAT

GO

— At this point, copy the FULL backup and the transaction log

— over to the Mirror Server (or use a UNC path)

— Alternatively, make sure the backup path and the restore path are the same

— and the UNC location can be seen by both principal and mirror

:CONNECT $(MirrorServer) -l $(SQLCMDLOGINTIMEOUT)

PRINT ‘ Test RESTORE FILELISTONLY to ensure we can see the backup’

GO

DECLARE @FileList TABLE

(

LogicalName NVARCHAR(128) NOT NULL,

PhysicalName NVARCHAR(260) NOT NULL,

Type CHAR(1) NOT NULL,

FileGroupName NVARCHAR(120) NULL,

Size NUMERIC(20, 0) NOT NULL,

MaxSize NUMERIC(20, 0) NOT NULL,

FileID BIGINT NULL,

CreateLSN NUMERIC(25,0) NULL,

DropLSN NUMERIC(25,0) NULL,

UniqueID uniqueidentifier NULL,

ReadOnlyLSN NUMERIC(25,0) NULL ,

ReadWriteLSN NUMERIC(25,0) NULL,

BackupSizeInBytes BIGINT NULL,

SourceBlockSize INT NULL,

FileGroupID INT NULL,

LogGroupGUID UNIQUEIDENTIFIER NULL,

DifferentialBaseLSN NUMERIC(25,0)NULL,

DifferentialBaseGUID UNIQUEIDENTIFIER NULL,

IsReadOnly BIT NULL,

IsPresent BIT NULL,

TDEThumbprint VARBINARY(32) NULL

);

DECLARE @RestoreStatement NVARCHAR(max)

DECLARE @BackupFile NVARCHAR(max);

DECLARE @logical_data NVARCHAR(max)

DECLARE @logical_log NVARCHAR(max);

SET @BackupFile = N’$(MirrorRestorePath)\$(Database2Mirror).bak’

— RESTORE FILELISTONLY FROM DISK = N’$(MirrorRestorePath)\$(Database2Mirror).bak’

SET @RestoreStatement = N’RESTORE FILELISTONLY FROM DISK=N”’ + @BackupFile + ””

INSERT INTO @FileList EXEC(@RestoreStatement);

SET @logical_data = (SELECT LogicalName FROM @FileList WHERE Type = ‘D’ AND FileID = 1)

SET @logical_log = (SELECT LogicalName FROM @FileList WHERE Type = ‘L’ AND FileID = 2)

:SETVAR LogicalData @logical_data

:SETVAR LogicalLog @logical_log

— This step will need to be altered depending upon the logical filenames within

— the backup file and the intended restore location

PRINT ‘RESTORE – ing the $(Database2Mirror) Database from $(MirrorRestorePath) to $(MirrorServer) with NORECOVERY’

RESTORE DATABASE $(Database2Mirror)

FROM DISK = N’$(MirrorRestorePath)\$(Database2Mirror).bak’ WITH

MOVE $(LogicalData) TO ‘$(MirrorDatabaseMDFPath)\$(Database2Mirror).MDF’,

MOVE $(LogicalLog) TO ‘$(MirrorDatabaseLDFPath)\$(Database2Mirror).LDF’,

REPLACE, NORECOVERY

GO

PRINT ‘RESTORE – ing the $(Database2Mirror) Database Log from $(MirrorRestorePath) to $(MirrorServer) with NORECOVERY’

GO

RESTORE LOG $(Database2Mirror)

FROM DISK = N’$(MirrorRestorePath)\$(Database2Mirror).trn’

WITH NORECOVERY

GO

— Now Initialise the mirroring partnership

PRINT ‘Now Initialising the Mirror from $(MirrorServer) (Mirror) to $(PrincipalServer) (Principal)’

GO

ALTER DATABASE $(Database2Mirror)

SET PARTNER = ‘TCP://$(PrincipalHost):$(PrincipalServerListenerPort)’

GO

:CONNECT $(PrincipalServer) -l $(SQLCMDLOGINTIMEOUT)

PRINT ‘Now Initialising the Mirror from $(PrincipalServer) (Principal) back to $(MirrorServer) (Mirror)’

GO

ALTER DATABASE $(Database2Mirror)

SET PARTNER = ‘TCP://$(MirrorHost):$(MirrorServerListenerPort)’

GO

PRINT ‘Tidying Up the Backup Files’

GO

DECLARE @DeleteTime DATETIME

SET @DeleteTime = DATEADD(MINUTE, -10, GETDATE())

EXECUTE master.dbo.xp_delete_file 0, N’\\RIDGIANDC1\Downloads’, N’Bak’, @DeleteTime, 1

EXECUTE master.dbo.xp_delete_file 0, N’\\RIDGIANDC1\Downloads’, N’Trn’, @DeleteTime, 1

PRINT ‘All DONE!!’

GO

— Finally, check it is all working

SELECT

DB_NAME(sd.[database_id]) AS [Database Name],

sd.mirroring_role_desc,

sd.mirroring_partner_name,

sd.mirroring_state_desc,

sd.mirroring_witness_name,

sd.mirroring_witness_state_desc,

sd.mirroring_safety_level_desc

FROM

sys.database_mirroring AS SD

WHERE

mirroring_partner_name IS NOT NULL

After the usual 30 minutes of figuring out how to move variables between T-SQL and SQLCMD, it all started working like a charm.

So…. Enjoy… Please feel free to use / dissect / re-write to whatever end you need.

If lost it you have, find it you should!

This is a very short blog as I’ve been a tad busy of late!….

I’ve been working with partitioning recently in a big Data Warehouse, and since this has involved file-groups, data-files etc.. and moving *lot* of data around, I wanted to make sure I knew where I’d put everything (both for partitioned and non-partitioned data)…

Enter my – Where is everything script:

SELECT

SCHEMA_NAME(ss.schema_id) AS [Schema Name],

OBJECT_NAME(db_ps.object_id) AS [Table Name],

db_ps.index_id,

CASE ISNULL(si.name, ‘0’)

WHEN ‘0’ THEN ‘No Indexes’

ELSE si.name

END AS [Index Name],

si.type_desc,

COALESCE(sfg1.name, sfg2.name) AS FileGroupName,

ISNULL(ps.name, ‘Not Partitioned’) AS [PartitionSchemeName],

db_ps.partition_number AS PartitionNumber,

db_ps.row_count

FROM

sys.partitions p

INNER JOIN sys.dm_db_partition_stats db_ps ON p.partition_id = db_ps.partition_id

AND p.object_id = db_ps.object_id

INNER JOIN sys.indexes si ON p.OBJECT_ID = si.OBJECT_ID AND p.index_id = si.index_id

INNER JOIN sys.objects so ON so.object_id = si.object_id

INNER JOIN sys.schemas ss ON so.schema_id = ss.schema_id

LEFT JOIN sys.partition_schemes ps ON ps.data_space_id = si.data_space_id

LEFT JOIN sys.partition_functions pf ON pf.function_id = ps.function_id

LEFT JOIN sys.partition_range_values pv ON pf.function_id = pv.function_id

AND p.partition_number = pv.boundary_id

LEFT JOIN sys.destination_data_spaces dds ON dds.partition_scheme_id = ps.data_space_id

AND dds.destination_id = p.partition_number

LEFT JOIN sys.partition_parameters pp ON pf.function_id = pp.function_id

LEFT JOIN sys.types t ON t.system_type_id = pp.system_type_id

LEFT JOIN sys.data_spaces ds ON ds.data_space_id=dds.data_space_id

LEFT JOIN sys.filegroups sfg1 ON sfg1.data_space_id = dds.data_space_id

LEFT JOIN sys.filegroups sfg2 ON si.data_space_id = sfg2.data_space_id

WHERE

so.type = ‘U’

AND si.index_id IN (0,1) — Heaps or Clustered Indexes

ORDER BY

[Schema Name] ASC, [Table Name] ASC, PartitionNumber ASC

Which does just the job for me….

SysInternal Process Explorer with SQL Server

Another SysInternals (www.sysinternals.com) tool I use quite a bit is the Process Explorer, which has just had a new release, taking it to V. 15.3. Process Explorer allows you to correlate CPU thread activity with the SQL Server threads. I find this a very quick and easy way to go from CPU utilisation into SQL and identify the query which is burning CPU. Now, this technique is not new – in fact the guys at SQLSkills (www.sqlskills.com) have blogged about this far better than I can – but…. I use this in anger, and it hasn’t let me down yet. Consequently, I find this a VERY useful tool in my troubleshooting kit bag.

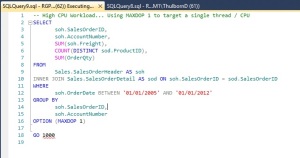

e.g…. First I create an AdventureWorks workload which hammers a single CPU due to the MAXDOP 1 setting on the query.

Now, with the “problem” at hand, I can open up Process Explorer and sort by CPU usage.

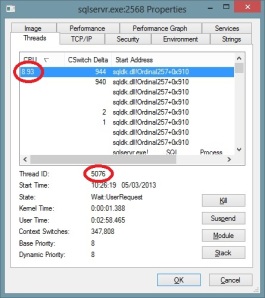

This can very quickly show me the SQL Server process is responsible for using CPU resources (which is the intention), but then right clicking on the SQL Server process and selecting “Properties” will give you a window such as this:

The threads tab shows you the Operating System level threads which are running within the SQL Server process, complete with the ability to sort by thread utilisation and the Thread Id which is actually running the user query.

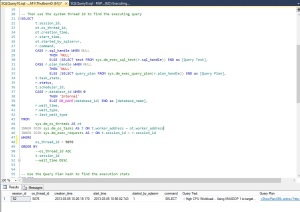

Armed with this information, we can query SQL Server itself using:

To see all the details of the query which is causing the CPU load. These details can expose everything about the query, from the actual statement, the plan, the connection details – all the usual goodness from the SQL Server DMVs. With the Process Explorer tool and SQL Server coupled in such a way, finding the full details about a hard hitting query takes less than a minute. Happy Days!..

WinDbg with SQL Server

Background:

Having been handed a broken SQL Server environment from one of our customers, I had to start some serious debugging to figure out what was causing the SQL Server process to get terminated and produce memory dumps.

Setting Up:

First off was getting WinDbg (the Microsoft Windows Debugger) up and running as described here: http://blogs.msdn.com/b/tess/archive/2005/12/05/associate-windbg-with-dmp-files.aspx

Second up, setting up the symbols I would need for the debugging session by using the symchk.exe tool to download all of the symbols for the SQL Server build in question using:

“C:\Program Files\Debugging Tools for Windows\symchk.exe” /r “C:\Program Files\Microsoft SQL Server\MSSQL11.MSSQLSERVER\MSSQL\Binn\*” /s SRV*”C:\Program Files\Debugging Tools for Windows\symbols”*http://msdl.microsoft.com/download/symbols

Finding the Problem:

So, right click and “Debug this Dump”….. Now… I’m not a hard-core developer, or a debugging expert, so I have a limited set of commands I know which help me to do the task at hand…. !analyse -v is probably one of the best, as this sets the debugger to work finding out what caused the exception. After that, a simple ‘k’ to drop out the stack at the point the exception occurred… Usually this will also list the DLL in question where the exception occurred….

My Problem:

For me, the DLL generating the exception was from a 3rd Party linked server which had been configured to run “in process” – i.e. inside the SQL Server memory space. When it hit an exception, down went SQL Server.

Solution:

SQL Server’s linked server providers have an option to run “in process” or “out of process”. This is a simple tick box within the Provider Options and controls where the DLL gets loaded as it is needed. I was able to move the provider outside of the SQL Server memory space (so no more crashes) and also tell the customer which DLL was causing the issue and who they needed to go and “have a chat” with. Happy customer 🙂

Linked Server Mayhem…

Background:

I recently encountered an issue with an ETL process pulling in data from an Excel Spread-Sheet for use within an SSAS cube. The ETL process uses the Microsoft.ACE.OLEDB.12.0 provider which opens up the SpreadSheet on a remote UNC share to read the data into a temporary table.

The Problem:

Seemingly randomly, the ACE Provider would crash and leave an open transaction running with no hope of ever completing. The SQL Server ‘Kill’ command would mark the transaction and the session as “Killed / RolledBack”, but the session would still be present, and the SpreadSheet would still be locked and inaccessible.

The Solution:

TCPView (www.sysinternals.com) seems to help out significantly here. Given that there doesn’t seem to be any way of killing the session (and hence freeing up the SpreadSheet) without re-starting SQL Server, and SQL Server is sitting waiting for a response through the linked server which is never going to arrive, the options are a little limited.

It’s not a walk in the park though – TCPView and SQL Server’s sys.dm_exec_connections DMV don’t seem to offer a direct means of correlating which session / connection and which TCPView entry is the offending connection, so a little bit of consideration (guesswork) is in order – but given that the alternative is to re-start an active production SQL Server instance, I know which one I prefer!

Recent Comments